Despite what people might think, the biggest challenge to the fully-autonomous future is not technical or scientific, but in fact, societal. According to a survey from the American Automobile Association (AAA), 88% of the American adult population say that they would never trust riding in a self-driving car. It’s easy to dismiss this lack of trust as ignorance on the part of the American consumer, but it’s crucial that the automotive industry addresses consumer concerns with new developments in technology. Driving is quite safe overall—the US sees about one death per 100 million miles travelled.

Some estimates suggest that in order to directly compare autonomous vehicle safety with that of non-autonomous vehicles, one would need to test drive self-driving cars for billions of miles, a prohibitively costly endeavour. If the autonomous vehicle industry wants to convince consumers that self-driving cars are safe, the industry needs to find a way to prove cars can see and perform even better than humans by demonstrating the robustness and redundancy of the technology, not driving billions of miles.

Though autonomous research has come far, there are still a few technical hurdles to clear. Multiview camera perception can provide an important supplement to the systems currently available on the market, and bolster consumer confidence.

Inferencing in ADAS and autonomy

Many of the autonomous features in development rely on systems that use machine learning patterns to infer which objects are in a scene. There are two important forms of inferencing used in autonomous vehicles today. Object detection uses inferencing to find objects in the road, and categorises them. When provided with clear, rich data, platforms can use inferencing to identify familiar objects in the road with high accuracy. There’s a greater margin of error allowed when it comes to object detection than for object distance. For example, if a system infers that an object in the road is a truck when it’s actually a sedan, the passengers are at a lower risk, because both sedans and trucks are objects a vehicle should avoid hitting.

Object distance is the other key form of inferencing. Nearly all camera systems used in autonomous solutions to determine distance use inferencing, or machine learning, to make an estimate. Inferencing for object distance can be a greater problem, as the vehicle will need to slow down or speed up, and miscalculating the difference of even a few meters could result in a crash. In this case, miscalculating distance and mistaking a sedan for a truck can be disastrous, as they are different sizes and the system could assume the sedan is further away than it actually is.

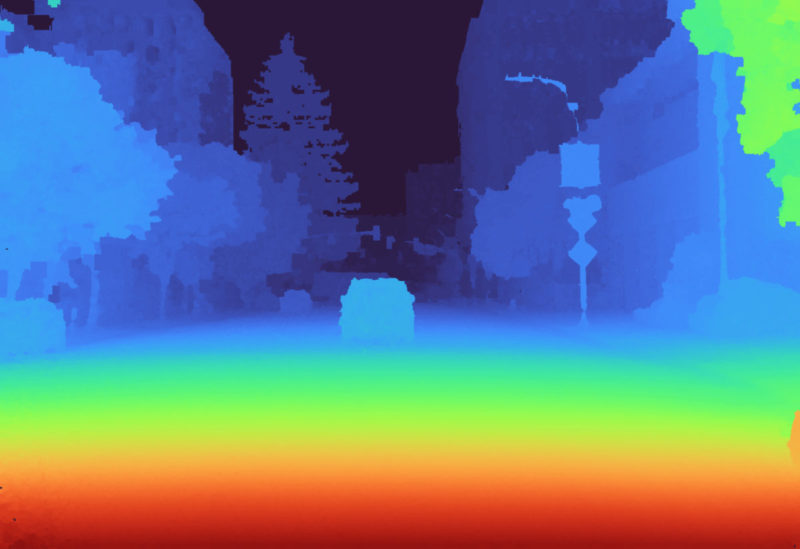

All inferencing-based systems have a long-tail of edge and corner cases that the system won’t be familiar with for years (if ever) meaning the error rate will be high. The missing link in camera-based systems on the market is measuring actual, physical depth to align with the visual information. By implementing a physics-based perception platform that measures distance, vehicles will not need to infer object distance, resulting in better object detection and safer driving. There are valuable tools on the market today that take a physics-based approach to determine measured distance, such as LiDAR, radar, and ultrasound These technologies have made great strides, but there are some gaps, including cost, power, range, reliability, and redundancy.

Implementing multiple modalities for redundancy and range

The most prevalent technology in the autonomous vehicle industry is LiDAR, a sensing system that uses lasers to measure the distance to objects. LiDAR data is sometimes fused with camera data, and the fused result is fed to an inferencing system that determines objects in the scene (i.e. car, truck, bicycle, pedestrian, dog). This LiDAR + camera approach has gotten the industry quite far and paved the way for self-driving cars, but the drawbacks include relatively low effective range—with limitations of 200-250 metres—and problems when operating in rain and fog and dealing with different kinds of reflective surfaces.

Regarding the range issues of current systems, consider that two vehicles approaching each other at 70mph have a stopping distance of about 75 meters, not including system reaction time. With only 150 meters of buffer space, vehicles have a very small window of time to swerve or brake the vehicle to safety. If only one car brakes, that time is greatly reduced, and all of this assumes two equal size vehicles on dry pavement. Those distances can more than quadruple for a semi-truck on a wet road. Having only 200 to 250 meters of depth and vision is simply not enough. If the vehicle’s sole form of sensing is a system that can only see 200 meters ahead, that could result in a deadly collision.

Equipping an autonomous vehicle with a full stack of sensing systems—combining LiDAR, radar, and ultrasonic sensors with multiview perception—is the only way to ensure that the vehicle will be able to capture depth at a variety of distances, with multiple redundancies.

Benefits of multiview perception

Multiview perception is the key to demonstrating to consumers that autonomous vehicles can see and perform better than the average human. For example, unlike humans, multiview systems can identify an unfamiliar object in the distance, capture the precise distance from the vehicle, and stop in time. These systems capture high-resolution, accurate, robust data from the surrounding scenes, and are able to better and more reliably identify objects.

Additionally, multiview perception systems are able to capture depth, so they can make the distinction between a moose and a photo of a moose, which will limit the number of crashes and costly damage to vehicles. They also can be used alongside LiDAR to provide redundancy for additional safety or extend the vehicle’s range of vision up to 1,000 meters, so that vehicles can easily adjust course when obstacles like roadwork, accidents, or other obstructions happen down the road.

What’s more, multiview perception systems can provide these rich results at a fraction of the cost of other systems, due to the off-the-shelf components, bringing the cost of an autonomous vehicle down significantly, and making it a more accessible purchase for consumers.

The improved depth, accuracy, and redundancy of multiview systems are also the keys to unlocking consumer trust. The average consumer doesn’t trust self-driving vehicles. And in the development of any kind of technology, it is pivotal to keep the end-user in mind. What will ensure that their car is driving safely? What will ensure they feel safe in their vehicle? Vehicles that see the way they do, in a way they can understand. The human body is built with two eyes that report to one neural network. With this ‘system’ they navigate traffic, slow down to a stop, and park their car. So what’s stopping autonomous vehicles from driving the same way?

Multiview technology uses two or more cameras to determine the distance to objects through a process called parallax. By combining RGB data with depth values, multiview systems are available to render the scene, in real-time, with photorealistic accuracy. With sophisticated algorithms, the system can determine the exact geometry and depth across the entire optical field of view so they see the way humans do, only better.

Rather than create platforms that replace the current options available today, developers will need to combine a number of modalities in order to generate redundancies and ensure the safety of passengers and drivers. Only then will it possible to truly achieve fully autonomous driving.

About the author: Dave Grannan is Chief Executive Officer of Light